Rona Wang is deeply curious about AI-generated images. A recent graduate of the Massachusetts Institute of Technology, Wang has written about the negative impacts of new-age technology, and she has found that in some cases it can produce odd images of people. But after she experimented with another AI program last week, she discovered a bigger concern.

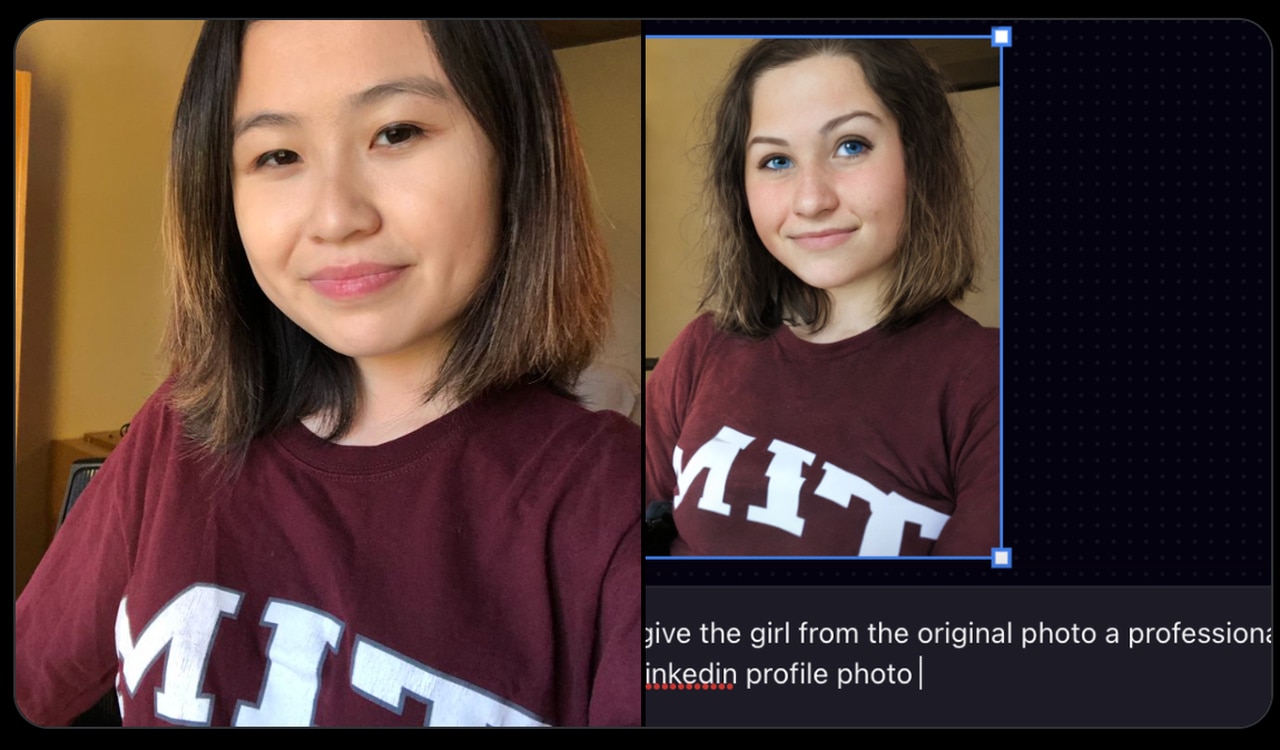

Looking for a new LinkedIn profile picture, Wang turned to Playground AI, an AI image generator. On July 14, Wang, an Asian American woman, uploaded a photo of herself smiling in a maroon-colored shirt and asked the program to make it look professional. When she received the result, she was surprised to see herself transformed into a white woman with blond-brunette hair and blue eyes.

“My first response was ‘OK, this is like sort of amusing,’ but also ‘Wow, like this is so on the nose like what are they trying to tell me?’ Are they trying to say, ‘Oh, to become more professional you should try to look more white or something like that?’” Wang said in a recent interview with MassLive. “It’s kind of making explicit and what I feel like has always been implicit as a professional – as an aspiring professional in America.”

Wang's tweet about the experience, which has garnered more than 5 million views, has sparked an online discussion about the ethics, or lack thereof, of AI-generated images.

“This is why we have to be wary of handing important tasks (such as in teaching, hiring, medicine, etc.) over to AI tools — unbiased training data is rarely ever used (and may not even exist),” tweeted Druv Bhagavan, an M.D./Ph.D. student at Washington University School of Medicine in St. Louis. “We end up replicating many inequities & biases that contributed to said training data.”

Experts have warned that AI image generators can perpetuate racial biases and societal stereotypes. If the data used to train an AI model disproportionately represents certain racial groups or contains biased information, the program may inadvertently learn and reproduce those same biases in the images it generates.

However, Playground AI founder Suhail Doshi said that the program might not be entirely at fault.

“The models aren’t instructable like that so it’ll pick any generic thing based on the prompt,” Doshi replied to Wang’s original tweet. “Unfortunately, they’re not smart enough. Happy to help you get a result but it takes a bit more effort than something like ChatGPT.”

In a separate tweet, the tech founder wrote, “[For what it’s worth] we’re quite displeased with this and hope to solve it.”

Other users echoed Doshi's point. It's a fair point, Wang said. However, the MIT graduate believes that because the platform is intended for consumers, it should offer clearer instructions on how to get the best results.

“It’s still telling that the fact that I told it something like, ‘give me a professional photo’, and it gave me like a white woman who is blond, which is actually a very small percentage of the population,” Wang told MassLive on Friday. “I think that still indicates something about the bias in AI, and the perhaps like the training data or the bias vector that was used to create this software and I still think that that’s something that’s worth talking about.”

Shortly after her tweet went viral, Wang received a message from Doshi offering to help her get a better AI image. She replied that she was eager to learn more about the technology. But as of Friday, Wang had still not heard back.

For now, Wang said she hopes AI technology will become more public and inclusive for people of all backgrounds. When asked whether she would keep using AI-generated images, Wang said she was unsure.

“I don’t know,” she said. “Maybe I’ll just pay a professional photographer to take a photo of me.”